From emotion to animation

In the past, cartoons and animated movies took months, sometimes years, of work to create realistic characters. Today, computer graphics are more realistic than ever, and computer-generated imagery (CGI) works wonders in your favorite movies. The field of computer vision includes facial motion capture and facial animation, which make these characters look as vivid and lifelike as real people. So, let's dive into how it's done.

Facial motion capture uses scanners or cameras to record a person's facial movements and convert them into a digital database. From that database, we can build many kinds of computer-animated characters. So, what does that database look like? It consists of the coordinates and relative positions of reference points on the actor's face. For example, the MPEG-4 standard defines standardized feature points that are used for purposes such as expression tracking. Its facial animation parameters form a complete set of basic facial actions, including head, eye, and mouth motion: each facial animation parameter is an action that deforms a face model away from its neutral state. This tracking can be two-dimensional, or even three-dimensional when multi-camera rigs or laser marker systems are used.
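To make the idea of "an action that deforms a face model away from its neutral state" concrete, here is a minimal sketch. It assumes a tiny face model with four hypothetical, named feature points (MPEG-4 itself uses its own numbered feature points) and a hypothetical `AnimationParameter` type that moves one point along one axis. Real MPEG-4 parameters are also scaled by face-specific units; this sketch uses a single made-up unit instead.

```python
from dataclasses import dataclass

# Hypothetical neutral face: feature-point name -> (x, y, z) in made-up model units.
# These names are illustrative only, not MPEG-4 feature-point identifiers.
NEUTRAL_FACE = {
    "mouth_left_corner": (-0.25, -0.40, 0.05),
    "mouth_right_corner": (0.25, -0.40, 0.05),
    "left_eyebrow_inner": (-0.10, 0.30, 0.08),
    "right_eyebrow_inner": (0.10, 0.30, 0.08),
}


@dataclass
class AnimationParameter:
    """Hypothetical action: move one feature point along one axis."""
    point: str     # which feature point to move
    axis: int      # 0 = x, 1 = y, 2 = z
    amount: float  # displacement from the neutral position (assumed model units)


def deform(neutral, params):
    """Return a new face with each parameter's displacement added to the neutral face.

    The neutral face itself is left unchanged, so every frame is described
    purely as an offset from the same neutral state.
    """
    face = {name: list(coords) for name, coords in neutral.items()}
    for p in params:
        face[p.point][p.axis] += p.amount
    return {name: tuple(coords) for name, coords in face.items()}


# A rough "smile": raise both mouth corners slightly.
smile = [
    AnimationParameter("mouth_left_corner", 1, 0.05),
    AnimationParameter("mouth_right_corner", 1, 0.05),
]
frame = deform(NEUTRAL_FACE, smile)
print(frame["mouth_left_corner"])
```

In this framing, a captured performance is simply a sequence of parameter sets, one per frame, each applied to the same neutral model.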

It may seem that facial motion capture is trivial compared to body motion capture. On the contrary, it is a greater challenge: the procedure, especially facial expression tracking, requires very high resolution to detect and then track subtle movements of the eyes and lips. The heroes of Lie to Me would have done an even better job if only they had used computer facial tracking techniques, filters, and algorithms! That's the secret behind why Elsa from Frozen moves realistically, or why your World of Warcraft characters can act like you. Because motion capture records the movements of real people, these characters can have more nuanced animation than manual animation alone produces.

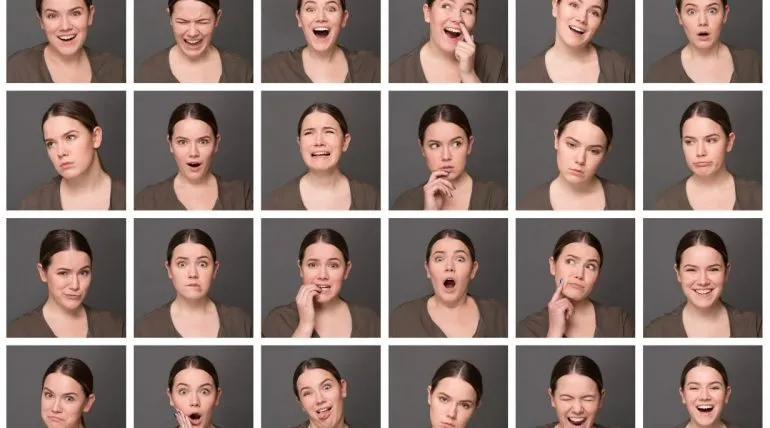

Movements like these require delicate algorithms, since they are often only a few millimeters in size. Facial expression capture therefore produces detailed output, which can be combined with facial motion capture to build a complete tracking system for facial expressions and movements. One application of computer vision in this field is emotion and mood tracking, which uses facial tracking inputs to recognize specific emotions and movements; these results can then be used to detect moods and emotions in images and videos.

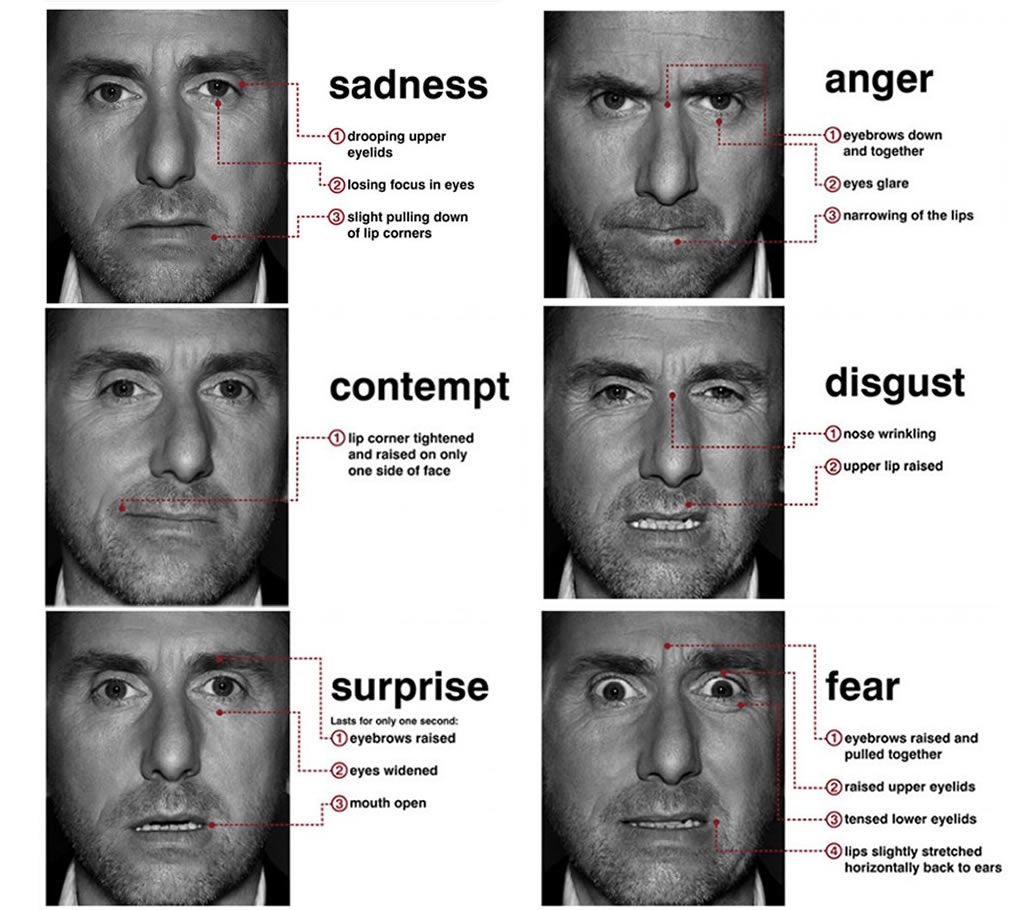

The analysis of human facial expression is not new: even Charles Darwin, the founder of the theory of evolution, made significant progress with his book The Expression of the Emotions in Man and Animals. Emotion tracking can be useful in cultural studies, since anthropologists agree that the same emotions are displayed differently across cultures. For example, in various studies, participants from Eastern cultures were reluctant to show their true emotions in the presence of an authority figure, whereas in a prior study without an authority figure present, the tracker recorded the same facial expressions as in their American and European counterparts. Ekman and Friesen found that there are six universal emotions in humans: happiness, sadness, anger, surprise, disgust, and fear. These emotions, found in every culture, have come to be known as prototypic or basic expressions, and they form the basis of modern facial expression tracking. The field keeps improving, because people display hundreds of emotions every day (unless you're Steven Seagal!), so computer vision research tries to capture even the subtlest emotions that can be tracked on a human face.
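Recognizing one of these six basic expressions from tracked facial points can be framed as a classification problem. The sketch below uses a simple nearest-prototype rule: it compares a vector of feature displacements (measured relative to the neutral face) with one prototype per emotion and picks the closest. The five feature names and all prototype values are invented for illustration; a practical system would learn its classifier from labeled examples rather than use hand-picked numbers.

```python
import math

# Hypothetical features, each a displacement from the neutral face
# (positive = up / wider / more). These names are illustrative assumptions.
FEATURES = ("mouth_corner_lift", "brow_raise", "eye_opening", "jaw_drop", "nose_wrinkle")

# Illustrative prototype vector per basic emotion.
# NOTE: invented numbers for the example, not measured data.
PROTOTYPES = {
    "happiness": (0.8, 0.1, -0.1, 0.2, 0.1),
    "sadness":   (-0.6, 0.5, -0.3, 0.0, 0.0),
    "anger":     (-0.2, -0.7, 0.3, 0.0, 0.3),
    "surprise":  (0.0, 0.9, 0.8, 0.8, 0.0),
    "disgust":   (-0.4, -0.3, -0.2, 0.1, 0.9),
    "fear":      (-0.2, 0.7, 0.7, 0.3, 0.0),
}


def classify(displacements):
    """Return the basic emotion whose prototype is nearest in Euclidean distance."""
    if len(displacements) != len(FEATURES):
        raise ValueError(f"expected {len(FEATURES)} features, got {len(displacements)}")
    return min(PROTOTYPES, key=lambda emotion: math.dist(displacements, PROTOTYPES[emotion]))


# Raised mouth corners with little else moving: closest to the "happiness" prototype.
print(classify((0.7, 0.0, 0.0, 0.1, 0.0)))  # → happiness
```

A nearest-prototype rule is chosen here only because it is easy to read; it forces every face into one of six categories, which is exactly the limitation that research on subtler emotions tries to overcome.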

Computer facial animation uses this data to create animated humans, animals, or even imaginary creatures, and it is applied not only in the film industry but also in education, scientific simulation, and communication. Technological advances have also improved facial animation to the point where it can be generated at runtime. Either way, face-tracking techniques will soon not need Pinocchio's nose to detect lies: your subtle facial expressions could give it all away.

_______________

References:

- Visage Technologies: “MPEG-4 Face and Body Animation (MPEG-4 FBA): An Overview”.

- Bailenson et al. (2008): “Real-time classification of evoked emotions using facial feature tracking and physiological responses”, in: International Journal of Human-Computer Studies 66: 303–317.

- Bettadapura, Vinay (2012): “Face Expression Recognition and Analysis: The State of the Art”, at: arxiv.org.

- Ekman, Paul and Wallace Friesen (1971): “Constants across cultures in the face and emotion”, in: Journal of Personality and Social Psychology 17.2: 124–129.