Facial emotion recognition: A complete guide

In our everyday interactions, much of what we convey isn’t expressed through words alone. Our facial expressions, gestures, and other non-verbal cues play a significant part in communication.

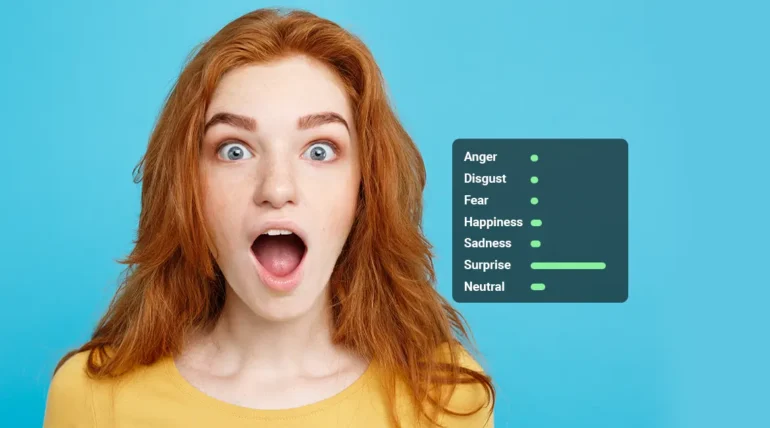

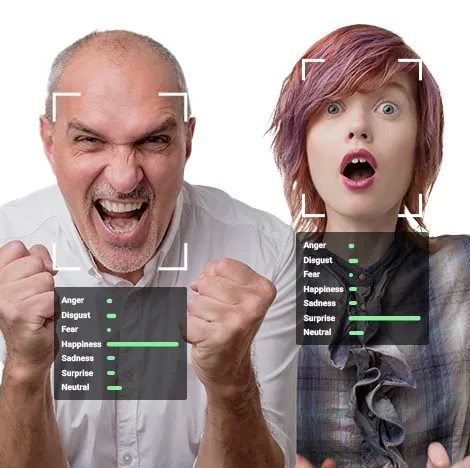

Facial emotion recognition, also known as facial expression recognition (FER), is the technology used to detect and interpret the emotions conveyed by facial expressions. Using the power of machine learning, it captures those non-verbal subtleties that can sometimes speak louder than words, helping us decode the complete message and underlying meaning.

In this blog post, we provide an overview of this technology and its current applications across industries, along with today’s challenges and future directions.

How facial emotion recognition works: a high-level overview

According to Paul Ekman, emotions can be inferred from facial expressions. For example, frowning, tightening of the eyes and raised lips indicate anger. Therefore, by analyzing facial expressions we can deduce how people feel.

Traditionally, facial emotion recognition relies on detecting changes in facial action units (AUs). An action unit is a particular activation of facial muscles, like an eyebrow raise or a jaw drop. These activations serve as the building blocks for recognizing emotional states.

AU-based algorithms work like this:

- Face detection: The first step is to detect the face within an image or video frame.

- Action unit identification: The algorithm identifies the specific action units (AUs) present in the facial expression. For example, in the Facial Action Coding System (FACS), a raised inner eyebrow corresponds to AU1, while parted lips correspond to AU25.

- Emotion classification: Using the combination of active AUs, the algorithm infers the corresponding emotion. For example, when it detects raised eyebrows combined with an open mouth, it recognizes the emotion as surprise.
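The last step above can be sketched as a small rule lookup. This is a minimal illustration, not a production rule set: the surprise rule follows the example in the text (AU1 plus AU25), while the happiness rule (AU6 plus AU12) and the names `EMOTION_RULES` and `classify_from_aus` are assumptions added for illustration.

```python
# Illustrative AU-to-emotion rules. The surprise rule mirrors the example in
# the text; the happiness rule (cheek raiser + lip corner puller) is an
# added assumption, not part of the original article.
EMOTION_RULES = {
    "surprise": {"AU1", "AU25"},
    "happiness": {"AU6", "AU12"},
}


def classify_from_aus(active_aus):
    """Return the emotion whose required AUs are all active, else 'unknown'.

    If several rules match, the rule that requires the most AUs wins.
    """
    active = set(active_aus)
    matches = [
        (len(required), emotion)
        for emotion, required in EMOTION_RULES.items()
        if required <= active  # every required AU is present
    ]
    if not matches:
        return "unknown"
    return max(matches)[1]


print(classify_from_aus(["AU1", "AU25"]))
print(classify_from_aus(["AU4"]))
```

Real AU-based systems use far richer rule sets or learned classifiers on top of the detected AUs; the lookup only shows the shape of the idea.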

However, our emotion recognition software works differently. It directly identifies emotions from the face without relying on action units. Here’s how it works:

- Face detection: When detecting the face, the algorithm extracts meaningful features from it, capturing essential information about its structure and expression.

- Emotion classification: Using a pre-trained model, it immediately classifies the face into one of several emotional categories (e.g., happy, sad, surprised). The model has learned from tens of thousands of examples with various facial expressions, allowing it to recognize emotions without first having to specify which AUs are active.
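To make the direct classification step more concrete, here is a minimal sketch of how raw model scores could be turned into a label. It assumes the model outputs one score per category, which is a common design but not something this article confirms for any specific product. The scores below are made up, and `classify_emotion` is a hypothetical helper.

```python
import math

EMOTIONS = ["happy", "sad", "surprised"]  # categories named in the text


def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_emotion(scores):
    """Return (label, probability) for the highest-scoring category."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]


# Hypothetical raw scores, standing in for a trained model's output.
label, prob = classify_emotion([2.0, 0.5, 1.0])
print(label, round(prob, 2))
```

Note that no action units appear anywhere in this path: the model maps face features straight to category scores.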

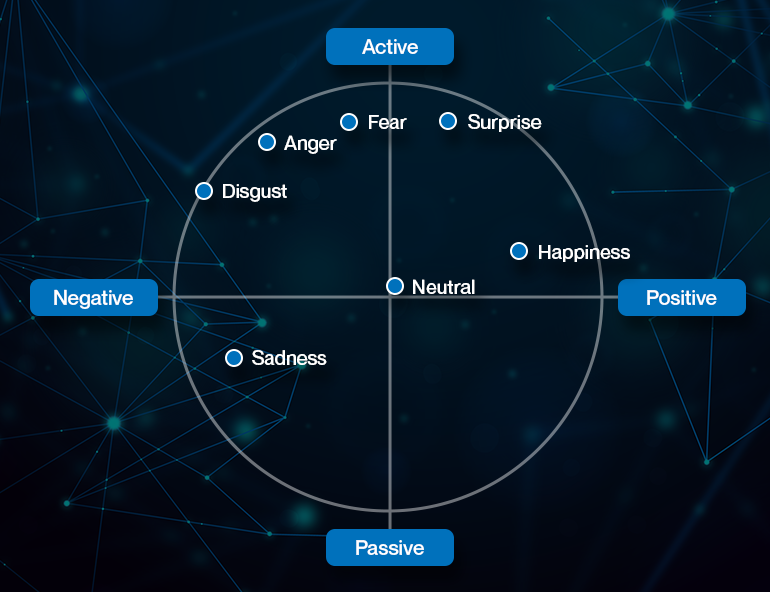

Valence and arousal

The concepts of emotional valence and arousal are important in emotion recognition technology to classify the intensity and type of emotion. Emotional valence describes whether an emotion is positive or negative, while arousal refers to its intensity or associated energy level.

For example, anger is an emotion with negative valence but high arousal, as it involves the activation of various muscles and physiological responses. In contrast, sadness has a negative valence but can range from low to high arousal levels.
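One simple way to picture these two dimensions is as coordinates on a plane. The sketch below assumes both values are scaled to the range -1 to 1, which is a convention chosen here for illustration. The function name and the example coordinates are hypothetical.

```python
def describe_affect(valence, arousal):
    """Map valence and arousal in [-1, 1] to a coarse quadrant description."""
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must lie in [-1, 1]")
    tone = "positive" if valence >= 0 else "negative"
    energy = "high" if arousal >= 0 else "low"
    return f"{tone} valence, {energy} arousal"


# Hypothetical coordinates for an angry expression.
print(describe_affect(-0.7, 0.8))
# Sadness can land in either arousal half, as noted above.
print(describe_affect(-0.6, -0.5))
```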

Depending on the use case and its potential risk factors, it can be beneficial to cover as much variance as possible. For example, you would typically need a lot more nuance when analyzing the emotions of a person behind the wheel of a freight truck than those of someone playing video games at home. Adding variance includes incorporating multiple types of inputs and modalities (multi-modal emotion recognition), like:

- Physiological changes: Strong emotions often elicit visible physiological responses in body language, such as the fight-or-flight response or rest-and-digest states. These can be detected and linked to particular emotions.

- Voice & language analysis: Voice characteristics, such as volume or energy, or language tone can provide additional insights into a person’s emotional state.

- Biometrics: With information like heart rate, we can also detect emotional states.
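One common way to combine such inputs is late fusion, where each modality produces its own emotion probabilities and the results are averaged with weights. The sketch below is only an illustration of that idea: the modality names, weights, and probabilities are made-up assumptions, and `fuse_modalities` is a hypothetical helper.

```python
def fuse_modalities(predictions, weights):
    """Weighted average of per-modality emotion probabilities (late fusion)."""
    total_weight = sum(weights[m] for m in predictions)
    fused = {}
    for modality, probs in predictions.items():
        share = weights[modality] / total_weight  # normalized modality weight
        for emotion, p in probs.items():
            fused[emotion] = fused.get(emotion, 0.0) + share * p
    return fused


# Hypothetical per-modality outputs for a single moment in time.
predictions = {
    "face": {"angry": 0.6, "calm": 0.4},
    "voice": {"angry": 0.9, "calm": 0.1},
    "heart_rate": {"angry": 0.7, "calm": 0.3},
}
weights = {"face": 0.5, "voice": 0.3, "heart_rate": 0.2}

fused = fuse_modalities(predictions, weights)
print(max(fused, key=fused.get))
```

Weighting lets a system lean on the modality it trusts most in a given setting, for example the voice channel when the face is poorly lit.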

Emotion recognition systems can also be tailored to the task at hand, which makes them more versatile. For example, additional discrete emotions, such as distress, can be added if labeled data is available. Besides valence and arousal, other dimensions like dominance can also be tracked.

Driven by algorithms

Machine learning algorithms are the driving force behind FER systems. We generally divide them into two categories: single-image systems and those that analyze sequential data.

- Single-image systems: These are particularly well suited to scenarios where emotional expressions are vivid and unmistakable, like a photograph that captures a radiant smile or a pronounced scowl. The starkness of the emotion in a single frame provides a clear signal for the system to analyze.

- Systems that process sequential data: These benefit from the dynamic nature of data, such as audio recordings or video streams. The continuous stream of information offers more context, enhancing the ability to evaluate emotional shifts over time. This is especially true for emotions that unfold or intensify gradually, which are better captured in sequences rather than static images.
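A simple way to see why sequences help is to smooth per-frame scores over time, so that a single noisy frame does not flip the result. The sketch below uses an exponential moving average as a stand-in for that idea. It is an assumption for illustration, not a description of how any particular sequential model works, and the scores are made up.

```python
def smooth_scores(frame_scores, alpha=0.3):
    """Exponentially smooth a sequence of per-frame scores in [0, 1]."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    current = None
    for score in frame_scores:
        # The first frame initializes the average; later frames blend in.
        current = score if current is None else alpha * score + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


# Hypothetical per-frame "anger" scores with one noisy spike in the middle.
print(smooth_scores([0.1, 0.1, 0.9, 0.1, 0.1]))
```

A smaller `alpha` damps spikes more strongly but reacts more slowly to genuine emotional shifts.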

Machine learning plays a pivotal role in the growing field of affective computing (emotion AI), dedicated to developing systems capable of recognizing emotions to interact with humans (human-computer interaction).

Moreover, vision transformers, an advanced class of machine learning models, are increasingly being used for their ability to handle complex patterns in visual data.

Supervised by humans

Humans are just as essential to FER systems as the algorithms. They help ensure that emotion recognition models are trained on high-quality, diverse, and accurately labeled datasets.

Here’s an overview of their responsibilities:

- Data labeling: Annotators examine images or videos and label them with the corresponding emotions. This involves identifying the emotion displayed by the subjects in the visual data, which can range from basic emotions like happiness and sadness to more complex states like confusion.

- Preparing training data: The labeled data serves as training material for machine learning models. Data scientists and other specialists ensure that the data is accurately labeled so that algorithms can learn to recognize and predict emotions correctly.

- Quality control: QC specialists help maintain the quality of the datasets. They review the data for consistency and accuracy, which is essential for the reliability of the FER system.

- Cultural sensitivity: Since facial expressions and their perception can vary across cultures, experts often work within specific cultural contexts to ensure that the FER system is sensitive to these nuances.

- Addressing data imbalance: Labeling a diverse range of emotions helps prevent bias in the FER system. For example, certain emotions may be underrepresented in the data, and human experts help rectify this by providing more examples of these emotions.

- Enhancing model robustness: By providing a wide variety of labeled data, data scientists and engineers work together to create robust models that can perform well across different demographics and in various lighting and environmental conditions.
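Besides collecting more examples of rare emotions, a common complementary technique is to weight each class inversely to its frequency during training. The sketch below shows that heuristic on a made-up label list. The helper name and the data are assumptions for illustration.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Give rarer classes larger weights: total / (n_classes * class_count)."""
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {label: total / (n_classes * count) for label, count in counts.items()}


# Hypothetical, deliberately imbalanced set of emotion labels.
labels = ["happy"] * 6 + ["sad"] * 3 + ["distress"] * 1
weights = inverse_frequency_weights(labels)
for label, weight in sorted(weights.items(), key=lambda item: item[1]):
    print(label, round(weight, 2))
```

With these weights, a mistake on an underrepresented emotion costs the model more than a mistake on a common one.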

Industry use cases

Understanding customer emotions is becoming crucial for businesses to improve their offerings and personalize customer experience. These are some of the main use cases:

Automotive industry

The automotive industry is using FER to enhance driver safety and comfort. Emotion recognition systems in vehicles can detect a driver’s mood and alertness, adjusting safety mechanisms accordingly.

For example, if a driver shows signs of drowsiness or stress, the system can issue alerts or even take control to prevent potential accidents. This technology is a critical component in the development of smart cars and autonomous vehicles.
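As a rough illustration of the alerting logic, the sketch below triggers an alert only when a drowsiness score stays high for several consecutive frames, which avoids reacting to a single blink. The threshold, the frame count, and the scores are hypothetical values chosen for the example.

```python
def should_alert(drowsiness_scores, threshold=0.8, min_consecutive=3):
    """Return True if scores stay at or above threshold for enough frames in a row."""
    streak = 0
    for score in drowsiness_scores:
        # Extend the streak on a high score, reset it otherwise.
        streak = streak + 1 if score >= threshold else 0
        if streak >= min_consecutive:
            return True
    return False


# Hypothetical per-frame drowsiness scores from an in-cabin camera.
print(should_alert([0.9, 0.2, 0.9, 0.85, 0.95]))
print(should_alert([0.9, 0.2, 0.9, 0.2, 0.9]))
```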

Healthcare

In the healthcare industry, this technology enables the monitoring of patients’ emotional states, providing valuable data for treatment plans. Its purpose can be to detect pain, monitor patients’ health status, or identify symptoms of illnesses.

Additionally, it can be used for analyzing the relationship between facial expressions and psychological health, with the potential to aid in diagnosing and treating disorders like autism.

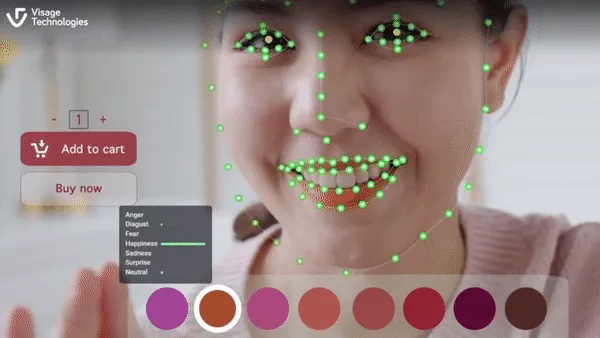

Retail and marketing

Companies use emotion detection tools to analyze consumer sentiments about products. For example, virtual try-on apps can benefit from emotion recognition by capturing the user’s response to different makeup looks or hairstyles. This feedback can help brands recommend products that customers are likely to enjoy and purchase.

For market research, emotion detection tools can be used for real-time analysis of viewer reactions to ads, allowing advertisers to optimize content for emotional engagement and tailor advertising to the viewer’s emotional state.

Banking and financial services (BFSI)

In the BFSI industry, facial emotion recognition can be used to improve customer service interactions. By identifying customers’ emotional states, customer service representatives can better prioritize complaints and address them more efficiently.

For example, a customer who’s showing signs of frustration or anger can get priority over a customer who’s calmer. This can help improve customer satisfaction and reduce the number of escalated complaints.
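The prioritization described above can be sketched with a priority queue keyed on a frustration score. This is a minimal sketch under stated assumptions: the `SupportQueue` class, the customer IDs, and the scores are all hypothetical, and a real system would combine the score with other factors such as waiting time.

```python
import heapq
import itertools


class SupportQueue:
    """Serve customers in order of detected frustration, highest first."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps arrival order

    def add(self, customer_id, frustration):
        # heapq is a min-heap, so negate the score to pop the highest first.
        heapq.heappush(self._heap, (-frustration, next(self._counter), customer_id))

    def next_customer(self):
        """Pop the most frustrated customer, or None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


queue = SupportQueue()
queue.add("customer-a", 0.2)
queue.add("customer-b", 0.9)
queue.add("customer-c", 0.9)
print(queue.next_customer())
```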

Gaming and entertainment

Game developers can create immersive experiences by integrating emotion recognition into their games. For example, a game could adapt its storyline, difficulty, or character interactions based on the player’s facial expressions, detected through a webcam.

This opens up new possibilities for interactive storytelling and personalized gameplay. The technology’s lightweight nature and offline functionality ensure a seamless integration into games without compromising performance.
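As a small illustration of emotion-driven adaptation, the sketch below nudges a difficulty level up or down based on the detected emotion. The emotion labels, the 1 to 10 scale, and the `adjust_difficulty` function are assumptions made for this example.

```python
def adjust_difficulty(difficulty, emotion, step=1, lowest=1, highest=10):
    """Lower difficulty for frustrated players, raise it for bored ones."""
    if emotion == "frustrated":
        difficulty -= step
    elif emotion == "bored":
        difficulty += step
    # Any other emotion leaves the level unchanged; clamp to the valid range.
    return max(lowest, min(highest, difficulty))


level = 5
for detected in ["bored", "bored", "frustrated", "happy"]:
    level = adjust_difficulty(level, detected)
print(level)
```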

Social robotics

From caring for the elderly to acting as customer service representatives, emotionally intelligent robots can be useful in a wide range of situations. They are able to engage with humans in a more natural and empathetic manner, opening up new possibilities for human-computer interaction and communication.

Challenges and trends

While machine learning models have made significant advances, they still face limitations similar to human judgment. In other words: just as humans can misinterpret emotions, machines can too.

For example, if 300 people were asked to complete a simple recognition task, e.g., to identify whether an image is of a panda or not, some errors would inevitably occur. The same image might be interpreted differently depending on the context.

Some of the main challenges in implementing FER include:

- Data inconsistency: Achieving consistent labeling of emotions is difficult due to the subjective nature of human expression. Multiple annotators are often needed to determine an average label for emotional states.

- Variability in expression: Human emotions are expressed and perceived in a wide variety of ways, making it challenging for FER systems to accurately recognize and interpret these expressions.

- Age and demographic factors: A person’s apparent age can influence emotion recognition, as models may only recognize emotions reliably for the demographics they were trained on, e.g., specific age groups, genders, or ethnicities.

- Mood vs. emotion: It’s important to differentiate between mood and emotion for effective recognition. Emotions are intense, short-lived responses, while moods are less intense but more enduring. New models are now capable of detecting subtle mood variations, which is critical for applications like vehicle operation for safety reasons.

- Environmental impact: Factors such as lighting can greatly affect the performance of FER systems. Controlling these variables is crucial for accurate emotion detection in both datasets and practical applications.
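For the data inconsistency challenge above, a simple way to combine several annotators’ labels for categorical emotions is a majority vote, reported together with the share of annotators who agreed. The sketch below assumes that approach; the helper name and the labels are made up for illustration.

```python
from collections import Counter


def aggregate_labels(annotations):
    """Return (majority_label, agreement) for one item's annotator labels.

    Ties go to the label that was seen first.
    """
    if not annotations:
        raise ValueError("at least one annotation is required")
    counts = Counter(annotations)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(annotations)


# Hypothetical labels from three annotators for the same image.
label, agreement = aggregate_labels(["sad", "sad", "neutral"])
print(label, round(agreement, 2))
```

Items with low agreement can be sent back for review or excluded from training, depending on how strict the dataset needs to be.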

Despite these challenges, models evaluated on most benchmark datasets report high accuracy, often in the high 80s and 90s in percentage terms. For instance, our emotion recognition software achieves an accuracy of 88%. This level of performance is a testament to the advancements in computing power and algorithmic sophistication.

There’s a growing trend towards making emotion recognition systems as multimodal as possible, an approach that enhances robustness and accuracy. Ethical considerations are also becoming increasingly important, especially in workplace implementations. The EU’s AI Act, for instance, highlights the need for ethical guidelines to ensure responsible use.

Emotion recognition is growing and becoming more nuanced

To sum up, emotion recognition is a growing field with immense potential. It bridges the gap between incredibly varied human expressiveness and exact computational analysis, helping us better understand the role of emotions in overall communication.

According to Deloitte, the emotion detection and recognition market is projected to reach $37 billion by 2026. The post-pandemic period has seen a particular surge, driven by an increase in connected devices, like smartwatches and VR headsets. By collecting physiological data (heart rate, skin conductance, body temperature), these devices can also be used to deduce emotional states. However, the use of personal data in emotion tracking has sparked privacy concerns, with individuals seeking more control over their information.

While there are challenges to overcome, the advancements in machine learning algorithms and computing power are paving the way for more accurate and versatile systems. As we continue to refine and customize these technologies, ethical considerations will play a crucial role in their successful implementation.

Our emotion recognition SDK enables real-time detection of emotions from images or videos, facilitating data-driven decisions. We’ve designed it for easy integration into new or existing applications, with customizable features to meet your unique requirements.

Don’t hesitate to contact us to explore it further.
